Have you ever thought about your ancestors, or looked at pictures of them, and realized, “I have that trait too!” Just as your traits are in large part determined by random combinations of genes from your ancestry, your safety world view is probably largely the product of chance - for example, whether you studied Behavioral Psychology or Human Factors in college, which influential authors’ views you were exposed to, who your first supervisor was, or whether you worked in the petroleum, construction or aeronautical industry. Our “Safety World View” is built over time and dramatically impacts how we think about, analyze and strive to prevent accidents.

Linear View - Human Error

Let’s briefly look at two views - Linear and Systemic - not because they are the only possible ones, but because they have had, and continue to have, the greatest impact on the world of safety. The Linear View is integral to what is sometimes referred to as the “Person Approach,” exemplified by traditional Behavior Based Safety (BBS), which grew out of the work of B.F. Skinner and the application of his research to Applied Behavior Analysis and Behavior Modification. Whether we have thought about it or not, much of the industrial world operates on this “linear” theoretical framework. We attempt to understand events by identifying and addressing a single cause or distinct set of causes (antecedents) that elicit unsafe actions (behaviors), which in turn lead to an incident (consequences). This view affects both how we try to change unwanted behavior and how we go about investigating incidents.

This behaviorally focused view naturally leads us to conclude in many cases that Human Error is, or can be, THE root cause of the incident. In fact, it is routinely touted that “research shows that human error is the cause of more than 90 percent of incidents.” We are also conditioned and “cognitively biased” to find this linear model appealing. I use the word “conditioned” because the linear model explains a lot of what happens in our daily lives, where situations are relatively clean and simple, so we naturally extend this way of thinking to more complex situations where it is perhaps less appropriate. Additionally, because we view accidents after the fact, the well-documented phenomenon of “hindsight bias” leads us to trace the cause linearly back to an individual, and since behavior is the core of our model, we have a strong tendency to stop there.

The assumption is that human error (the unsafe act) is a conscious, “free will” decision and is therefore driven by psychological factors such as complacency, lack of motivation, carelessness or other negative attributes. This leads to the also well-documented Fundamental Attribution Error, whereby we tend to attribute the failures of others to negative personal qualities such as inattention or lack of motivation, which in turn leads to the assignment of causation and blame. This assignment of blame may feel warranted and even satisfying, but it does not necessarily deal with the real “antecedents” that triggered the unsafe behavior in the first place. As Sidney Dekker stated, “If your explanation of an accident still relies on unmotivated people, you have more work to do.”

Systemic View - Complexity

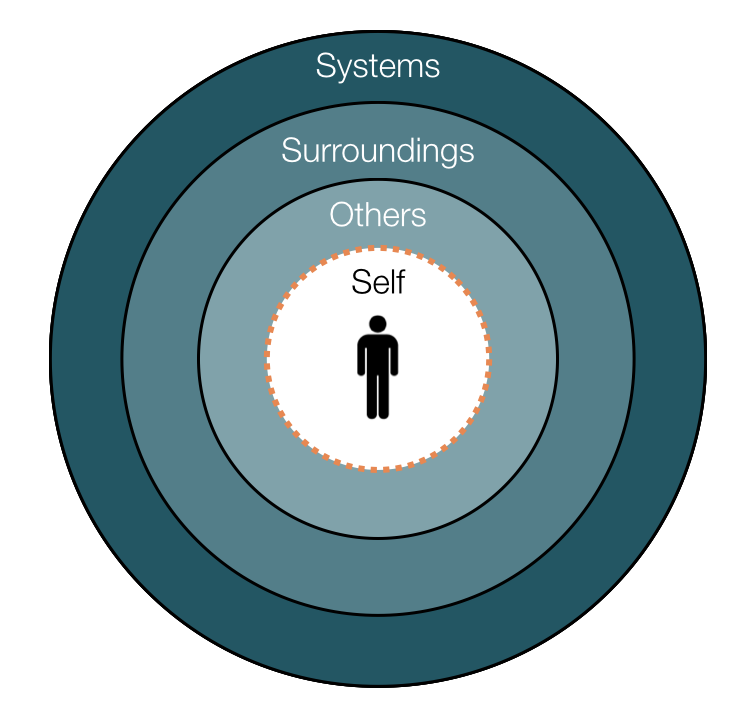

In reality, most of us work in complex environments that involve multiple interacting factors and systems, and the linear view has a difficult time dealing with this complexity. James Reason (1997) convincingly argued for the complex nature of work environments with his “Swiss Cheese” model of complexity. In his view, accidents are the result of active failures at the “sharp end” (where the work is actually done) and “latent conditions,” which include many organizational decisions made at the “blunt end” (higher management) of the work process. Because barriers fail, there are times when the active failures and latent conditions align, allowing an incident to occur. More recently, Hollnagel (2004) has argued that active failures are a normal part of complex workplaces because individuals must adapt their performance to a constantly changing environment and to the pressure to balance production and safety. Accidents “emerge” as this adaptation occurs (Hollnagel refers to this adaptive process as the “Efficiency Thoroughness Trade Off”). Dekker (2006) has added the idea that this adaptation is normal and even “locally rational” to the individual committing the active failure, because he or she is responding to a context that may not be apparent to those observing performance in the moment or investigating a resulting incident.

Focusing only on the active failure as the result of “human error” misses the real reasons that it occurs at all. Understanding the complex context that elicits the decision to behave in an “unsafe” manner provides more meaningful information. It is much easier to engineer the context than it is to engineer the person. While a person is involved in almost all incidents in some manner, human error is seldom the “sufficient” cause of the incident because of the complexity of the environment in which it occurs. Attempting to explain and prevent incidents from a simple linear viewpoint will almost always leave out contributory (and often non-obvious) factors that drove the decision and thus led to the incident.

Why Does it Matter?

Thinking of human error as a normal and adaptive component of complex workplace environments leads to a different approach to preventing the incidents that can emerge out of those environments. It requires that we gain an understanding of the many and often surprising contextual factors that can lead to the active failure. If we are going to engineer safer workplaces, we must start with something that does not look like engineering at all - namely, candid, informed and skillful conversations with and among people throughout the organization. These conversations should focus on determining the contextual factors that are driving the unsafe actions. It is only with this information that we can effectively eliminate what James Reason called “latent conditions,” which create the contexts that elicit the unsafe action in the first place. Additionally, this information should be used in the moment to eliminate active failures and should also be allowed to flow to decision makers at the “blunt end,” so that the system can be engineered to maximize safety. Your safety world view really does matter.